I briefly mentioned the HoloToolkit for Unity before in my article “Fragments found my couch, but how?” The toolkit is an amazing bit of open source code from Microsoft that includes a large set of MonoBehaviours that make it easier to build HoloLens apps in Unity.

The toolkit makes it easy to support speech commands through the use of KeywordManager (or if you’d like more direct control you can use the SDK class KeywordRecognizer directly). But one of the features I found glaringly missing from the docs and the toolkit was the ability to generate speech using Text to Speech.

The Universal Windows Platform (UWP) offers the ability to generate speech using the SpeechSynthesizer class, but the main problem with using that class in Unity is that it doesn’t interface with the audio device directly. Instead, when you call SynthesizeTextToStreamAsync the class returns a SpeechSynthesisStream. In a XAML application you would then turn around and play that stream using a MediaElement, but in a pure Unity (non-XAML) app we don’t have access to MediaElement.

I asked around on the Unity forums and Tautvydas Zilys was kind enough to point me in the right direction. He suggested I somehow convert the SpeechSynthesisStream to a float[] that could then be loaded into an AudioClip. This worked, but it was harder than I expected.

Technical Details

I’ll explain how the work was done here for those who would really like to dig into the technical interop between Unity and the UWP. If you’re only interested in using Text to Speech in your HoloLens application, feel free to jump down to the section Using TextToSpeech.

Audio Formats

The data in the stream returned by SpeechSynthesizer basically contains an entire .WAV file, header and all. But in order to dynamically load audio at runtime into Unity, this data needs to be converted into an array of floats that range from -1.0f to +1.0f. Luckily I found an excellent sample provided by Jeff Kesselman in the Unity forums that showed how to load a WAV file from disk into the float[] that Unity needs. I removed the ability to load from disk and focused on the ability to load from a byte[]. Then I added a method called ToClip that would convert the WAV audio into a Unity AudioClip. That code became my Wav class, which you can find on GitHub here.
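To make the conversion concrete, here’s a minimal sketch of turning raw PCM sample bytes into the floats Unity expects. It assumes 16-bit little-endian mono samples and that the caller already knows where the sample data starts inside the WAV bytes. PcmSketch and Pcm16ToFloats are names made up for this illustration; they are not part of the Wav class, which may handle more cases than this does.

```csharp
using System;

// Hypothetical helper for illustration only; not part of the Wav class.
public static class PcmSketch
{
    // Converts 16-bit little-endian PCM bytes into floats in the range -1.0f to just under +1.0f.
    // Assumption: mono audio, and 'offset' points at the first sample byte (after the WAV header).
    public static float[] Pcm16ToFloats(byte[] pcm, int offset, int sampleCount)
    {
        if (pcm == null) throw new ArgumentNullException("pcm");
        if (offset < 0 || sampleCount < 0 || offset + sampleCount * 2 > pcm.Length)
            throw new ArgumentOutOfRangeException("sampleCount");

        var samples = new float[sampleCount];
        for (int i = 0; i < sampleCount; i++)
        {
            int index = offset + i * 2;

            // Combine low and high bytes into one signed 16-bit value
            short value = (short)(pcm[index] | (pcm[index + 1] << 8));

            // Scale -32768..32767 down to the range Unity needs
            samples[i] = value / 32768f;
        }
        return samples;
    }
}
```

Dividing by 32768 means the most negative sample maps to exactly -1.0f while the most positive sample lands just below +1.0f, which keeps every value inside the range AudioClip accepts.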

The main code I added to convert the WAV data to an AudioClip is:

    public AudioClip ToClip(string name)
    {
        // Create the audio clip
        var clip = AudioClip.Create(name, SampleCount, 1, Frequency, false); // TODO: Support stereo

        // Set the data
        clip.SetData(LeftChannel, 0);

        // Done
        return clip;
    }

Stream to Bytes to WAV

Now that I had the ability to load WAV data and convert it to an AudioClip, I needed a way to convert the SpeechSynthesisStream to a byte[]. This wasn’t overly complex, but it did involve some intermediary classes.

First, you need to call GetInputStreamAt to access the input side of the SpeechSynthesisStream. That gives you an IInputStream which can be passed to a DataReader. From there, finally, we can call ReadBytes to read the contents of the stream into a byte[].

    // Get the size of the original stream
    var size = speechStream.Size;

    // Create buffer
    byte[] buffer = new byte[(int)size];

    // Get the input side of the stream, starting at the first byte
    using (var inputStream = speechStream.GetInputStreamAt(0))
    {
        // Close the original speech stream to free up memory
        speechStream.Dispose();

        // Create a new data reader off the input stream
        using (var dataReader = new DataReader(inputStream))
        {
            // Load all bytes into the reader
            await dataReader.LoadAsync((uint)size);

            // Copy from reader into buffer
            dataReader.ReadBytes(buffer);
        }
    }

    // Load buffer as a WAV file
    var wav = new Wav(buffer);

Threading and Async

The final hurdle was the biggest, and it was due to the differences between the UWP threading model and Unity’s threading model. Many UWP methods follow the new async and await pattern, which is extremely helpful to developers when building multi-threaded applications but isn’t supported by Unity. In fact, Unity is very much a single-threaded engine and it uses tricks like coroutines to try and simulate support for multi-threading. This is where things get a little hairy.

TextToSpeech has a method called Speak, but Speak cannot be marked async since Unity doesn’t understand async. Inside this method, however, we need to call other UWP methods that need to be awaited, such as SpeechSynthesizer.SynthesizeTextToStreamAsync.

The way we work around this is to have the Speak method return void, but inside the Speak method we spawn a new Task with an inline async anonymous function. That sounds really complicated, but the words in that sentence are probably more complicated to type than the actual code. Here’s what it looks like:

    // Need await, so most of this will be run as a new Task in its own thread.
    // This is good since it frees up Unity to keep running anyway.
    Task.Run(async () =>
    {
        // We can do stuff here that needs to be awaited
    });

As the comments above call out, the good news here is that anything running inside that block will run on its own thread, which leaves the Unity thread completely free to continue rendering frames (a critically important task for any MR/AR/VR app).

The last “gotcha” comes when the speech data has been converted and it’s time to hand it back to Unity. Remember, Unity is single-threaded only and Unity classes must be created on Unity’s thread. This is true even of classes that don’t do any rendering, like AudioClip. If we try to create the AudioClip inside that Task.Run code block above, we will be creating it on a thread pool thread created by UWP. This will fail with an error message in the Unity Error window explaining that an attempt was made to create an object outside the Unity thread. But we can’t put the code outside of the Task.Run block either, because anything outside of the Task.Run block will run in parallel with code inside the block! We would be trying to create the AudioClip before speech was even done generating!

So we need to be able to run something on Unity’s thread, but we need to be able to do it inside the Task.Run block, and that can be accomplished by an undocumented helper method called UnityEngine.WSA.Application.InvokeOnAppThread. That helper method takes another anonymous block of code and will schedule that to run back on Unity’s main thread. It looks like this:

    // The remainder must be done back on Unity's main thread
    UnityEngine.WSA.Application.InvokeOnAppThread(() =>
    {
        // Convert to an audio clip
        var clip = wav.ToClip("Speech");

        // Set the clip on the audio source
        audioSource.clip = clip;

        // Play audio
        audioSource.Play();
    }, false);
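Putting the pieces together, here’s a rough sketch of how a Speak method could be assembled from the snippets above. It is not necessarily how the real TextToSpeech script is organized. SpeakSketch is a made-up class name, the code relies on the Wav class described earlier, and it assumes the WINDOWS_UWP scripting define is set for your UWP build so the WinRT namespaces are only compiled there.

```csharp
using UnityEngine;

#if WINDOWS_UWP
using System;
using System.Threading.Tasks;
using Windows.Media.SpeechSynthesis;
using Windows.Storage.Streams;
#endif

// Hypothetical component for illustration; requires the Wav class from this article.
public class SpeakSketch : MonoBehaviour
{
    // AudioSource that will play the generated speech (assign in the Inspector)
    public AudioSource audioSource;

    public void Speak(string text)
    {
#if WINDOWS_UWP
        // Copy the reference on Unity's thread so the task only captures a local
        var source = audioSource;

        // Not awaited: Speak returns right away and Unity keeps rendering
        Task.Run(async () =>
        {
            byte[] buffer;

            using (var synthesizer = new SpeechSynthesizer())
            using (SpeechSynthesisStream speechStream =
                       await synthesizer.SynthesizeTextToStreamAsync(text))
            {
                // The stream holds an entire WAV file; read all of it into memory
                uint size = (uint)speechStream.Size;
                buffer = new byte[size];

                using (var inputStream = speechStream.GetInputStreamAt(0))
                using (var dataReader = new DataReader(inputStream))
                {
                    await dataReader.LoadAsync(size);
                    dataReader.ReadBytes(buffer);
                }
            }

            // As in the snippets above, the Wav object is built on this background thread
            var wav = new Wav(buffer);

            // AudioClip must be created on Unity's thread, so hop back before touching it
            UnityEngine.WSA.Application.InvokeOnAppThread(() =>
            {
                source.clip = wav.ToClip("Speech");
                source.Play();
            }, false);
        });
#else
        // No UWP speech API here (for example in the editor), so just log the text
        Debug.LogWarning("Text to Speech is not available here. Would have said: " + text);
#endif
    }
}
```

One difference from the earlier snippet: this sketch disposes the speech stream after the bytes have been read rather than before, which is simply the more conservative ordering. Passing false as the second argument to InvokeOnAppThread means the background task does not wait for the clip to start playing.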

Using TextToSpeech

If all that code above sounds confusing, don’t worry! We’ve made it super simple for you. You can simply download the TextToSpeech.cs file here.

Once you’ve got the two scripts, here’s what you need to do in order to get speech working:

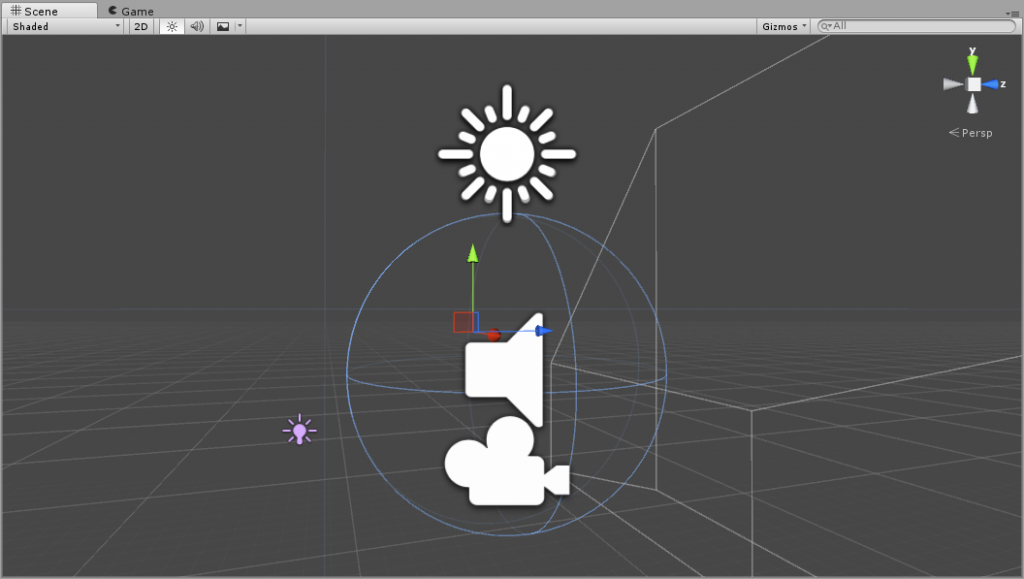

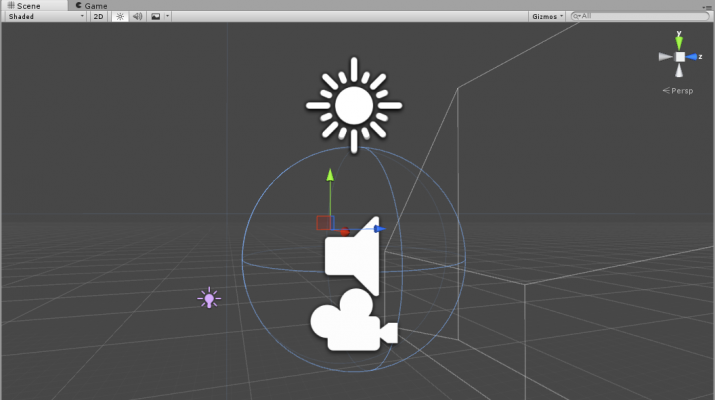

- Create an AudioSource somewhere in the scene. I recommend creating it on an empty GameObject that is a child of the Main Camera and is positioned about 0.6 units above the camera.

Wherever you place this AudioSource is where the sound of the voice will come from. Placing it 0.6 units above the camera and having it be a child of the camera means you’ll always hear the voice and the voice seems to come from a similar location as it does when hearing Cortana in the OS.

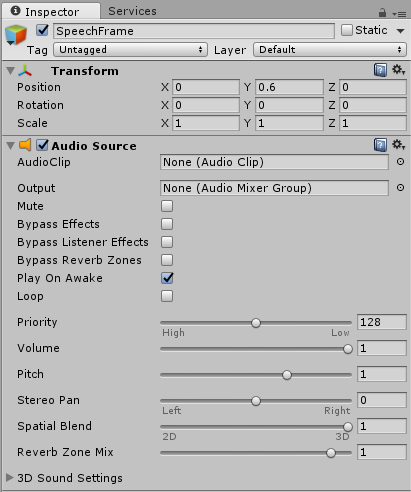

- Recommended: Configure the AudioSource to have a Spatial Blend of full 3D.

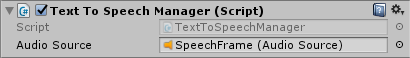

- Add a TextToSpeech component anywhere in your scene, then drag and drop the AudioSource you created earlier onto the component to link the two.

- Anywhere in code, call TextToSpeech.Speak("Your Text Here!");
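As a small usage sketch of the steps above, a script can hold a reference to the TextToSpeech component and call Speak on it. GreetingSketch and its field names are made up for this example, and the commented-out namespace is an assumption that depends on which copy of the script you’re using.

```csharp
using UnityEngine;
// using HoloToolkit.Unity; // Assumption: uncomment if your copy of TextToSpeech lives in this namespace

// Hypothetical example script; attach it to any GameObject in the scene.
public class GreetingSketch : MonoBehaviour
{
    // Drag the TextToSpeech component from your scene onto this field in the Inspector
    public TextToSpeech textToSpeech;

    // The same AudioSource that was linked to the TextToSpeech component
    public AudioSource speechSource;

    void Start()
    {
        // Speak returns immediately; the audio plays once synthesis finishes
        if (textToSpeech != null)
        {
            textToSpeech.Speak("Your Text Here!");
        }
    }

    // Speech plays through a regular AudioSource, so stopping it stops the voice
    public void StopTalking()
    {
        if (speechSource != null)
        {
            speechSource.Stop();
        }
    }
}
```

Holding a reference to a specific component, rather than calling through a single shared instance, also leaves room for several speakers in one scene.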

Known Issues

Here are the current known issues:

- Big one: Speech is not supported in the editor! Sorry, text to speech is a UWP API and therefore does not work in the Unity editor. You’ll see warnings in the debug window along with the text that would have been spoken.

Great article. Thank you for posting this and submitting a pull request to the Toolkit.

Fantastic! I’m using it well, and it is easy to implement.

Is there a method to stop talking?

The TextToSpeech component is now part of the Mixed Reality Toolkit. You can find it here. Since TextToSpeech uses a Unity AudioSource you can use AudioSource.Stop to stop the speech.

Well written article! All the GitHub links are dead though. Any chance they could be updated?

The TextToSpeech component is now part of the Mixed Reality Toolkit. You can find it here.

Hi,

I cannot build this example.

SCS0117 ‘TextToSpeechManager’ does not contain a definition for ‘Instance’

The .Instance property was from when I was originally treating TextToSpeech as a singleton. Before adding it to the toolkit we decided NOT to treat it as a singleton because you may want multiple subjects speaking and with different voices. The TextToSpeech component is now part of the Mixed Reality Toolkit. You can find it here.

Hi Jared, great- I was looking for just this. However, the links to the files TextToSpeechManager.cs and Wav.cs are both yielding a 404 error. Also the same for the github toolkit. Could you pls send me the files to my email id or give me another link where these exist? Thanks!

Hey Nischita. The TextToSpeech component is now part of the Mixed Reality Toolkit. You can find it here.

Great! Thanks Jared.

Is it still true that Speech is not supported in the editor? Also, TextToSpeechManager does not exist anymore, it’s just TextToSpeech. Do we need to include any header to use this? Thanks

TextToSpeech is now part of the Mixed Reality Toolkit here. It still does not work within the Unity Editor because it requires an API that is not supported in Unity at design time. Instead you’ll see a message in the log window that reports what would have been said.